Table of Contents

The Government of Canada aims to publish data in an open format and provide people with easy and reliable access to data, all while reducing barriers to entry, promoting innovation and eliminating unnecessary costs for businesses using the data.

Data is presented in different formats. This document contains information, tips and suggestions on how to use some of the formats presented in the Open Data Portal as well as how to work with some of the application programming interfaces (APIs). For general information on working with open data, please visit our Open Data 101 page.

Structured Data

Structured data is usually in the form of text but follows a specific format giving it machine readability. Some more complex formats may be in binary format such as maps and GIS related files. You will find many different formats in the Open Data Portal. This section covers some of the popular formats and provides some tips on how to work with them.

CSV

The easiest method for working with a Comma-Separated Values (CSV) file is to use a spreadsheet application such as OpenOffice Calc or Microsoft Excel. Simply launch the application and open the .csv file to launch the application’s CSV import wizard.

Character Encoding

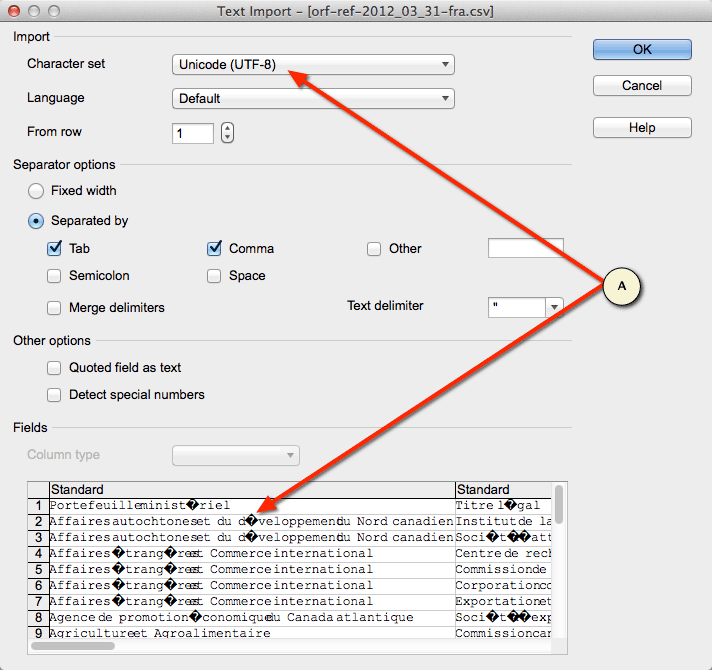

One important detail is to pay attention to Character Encoding which can affect how the data, mainly the text is parsed during the import. This will be more noticeable when dealing with languages such as French which contain extended characters.

OpenOffice provides easy selection of the character encoding to be used. Figure 1 and Figure 2 illustrate an example where the downloaded CSV dataset file was encoded using a different format than the default UTF–8 for OpenOffice (illustration using NeoOffice).

Figure 1: Example of a CSV import wizard showing incorrect encoding

Accessible description of Figure 1

Screenshot showing the comma-delimited file import wizard in Neo Office. The file being imported contains French extended characters. The user has selected UTF-8 as the character encoding. The preview area is displaying the characters incorrectly as it is not the proper character encoding for this file.

Figure 2: A CSV file imported using the incorrect character encoding

Accessible description of Figure 2

A comma-delimited file displayed in the Neo Office spreadsheet editor. The file contains French extended characters which were not properly imported due to using the incorrect character encoding.

Another common 8-bit character encoding in Canada is ISO–8859–1. In this scenario, selecting the ISO–8859–1 character encoding resolves the extended character issue as shown in Figure 3 and Figure 4.

Figure 3: A CSV import wizard showing proper character encoding

Accessible description of Figure 3

Screenshot showing the comma-delimited file import wizard in Neo Office. The file being imported contains French extended characters. The user has selected the appropriate ISO-8859-1 encoding and the preview area is displaying the characters properly.

Figure 4: A CSV file imported into NeoOffice using the proper character encoding

Accessible description of Figure 4

A comma-delimited file displayed in the Neo Office spreadsheet editor. The file contains French extended characters which were properly imported using the correct character encoding.

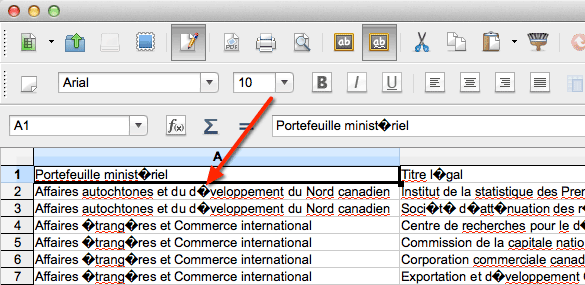

You may notice character encoding issues when opening a CSV file in Microsoft Excel as shown in Figure 5. For this example, we will be using Microsoft Excel in Microsoft Office Professional Plus 2010.

Figure 5 - File opened in Excel using incorrect file encoding

Accessible description of Figure 5

A screenshot of a comma-delimited file opened in Excel showing incorrectly decoded French characters.

The character encoding can be manually selected using the data import wizard. The first step is to start with a new Excel workbook. Go to the Data tab and click on From Text which will launch the import wizard (Figure 6).

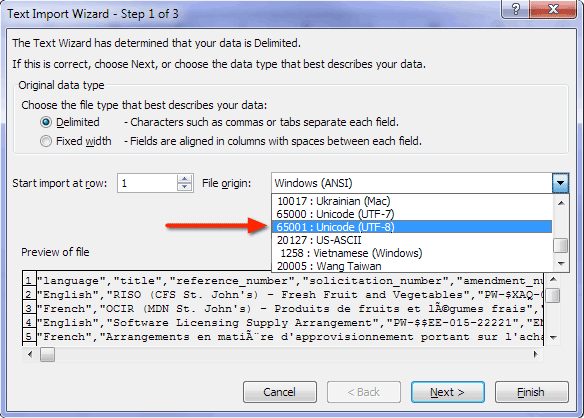

The first step in the wizard is to select the appropriate encoding. In this example, we are selecting the UTF-8 encoding which is located near the bottom of the list. Once selected, you can preview the character decoding in the data preview pane of the wizard.

Figure 6 - Selecting UTF-8 in Text Import Wizard

Accessible description of Figure 6

A screenshot showing Step 1 of 3 of the Text Import Wizard in Microsoft Excel. The file origin list box is open with Unicode UTF-8 selected.

The next step (Figure 7) allows you to specify the proper delimiter(s) used in the data file. Once again, the Data preview pane allows you to preview your data and see if it is parsing the data correctly.

Figure 7 - Selecting delimiters in Text Import Wizard

Accessible description of Figure 7

A screenshot showing Step 2 of 3 of the Text Import Wizard in Microsoft Excel. The Comma delimiter is checked and the data preview pane is showing the data separated into columns.

Optionally, you can specify the data types for each of the columns (Figure 8). In most cases, simply clicking Finish will suffice.

Figure 8 - Selecting data formats in Text Import Wizard

Accessible description of Figure 8

A screenshot showing Step 3 of 3 of the Text Import Wizard in Excel. This step has settings allows the user to set the data formats for each of the columns. The defaults are used for this example.

Figure 9 shows the characters properly decoded in Excel having used the Text Import Wizard.

Figure 9 - Data imported into Excel using proper character encoding

Accessible description of Figure 9

A screenshot showing a comma-delimited file imported into Excel using the Text Import Wizard. The French characters are shown properly having applied the appropriate character encoding.

Byte Order Mark (BOM)

You may discover an interesting special byte at the beginning of a file. This byte is called the Byte Order Mark or BOM. This marker is used to signal the size of the character encoding used in the UTF encoded file. For example, UTF–16 will render as U+FEFF (Figure 5) or þÿ in a text editor capable of displaying this value. In general, you do not need to concern yourself with the BOM as it is used by the application you are using. However, it can be a useful byte to help you select the correct file encoding during an import if you are having issues.

Figure 10: A Byte Order Mark in a data file

Accessible description of Figure 10

A Byte Order Mark displayed in a text editor.

JSON

JavaScript Object Notation (JSON) is a structured open data format that is lightweight and very well suited for mobile applications. It is used either natively or as a library available in most web and mobile development languages. Detailed information on JSON can be found on the official JSON web site. Here is a simple example of JSON data:

"metadata":{

"request":{

"dateCreated":"2014-01-01T00:00:00+00:00",

"name": "Example JSON Dataset" },

"items":[

{ "name":"Item 1", "colour":"red" },

{ "name":"Item 2", "colour":"blue" }

]

}

We have lots of great examples of working with JSON data and RESTful APIs in various programing languages.

XML

XML (eXtensible Markup Language) defines a set of rules for encoding documents in machine-readable form. It is defined in the XML 1.0 Specification.

Geo Formats

GeoTIFF

GeoTIFF files are similar to standard graphical TIFF files; they are viewable using a regular image viewer that supports the TIFF format. However, GeoTIFF files contain extra metadata related to the geographical nature of the image. Refer to the official sites on GeoTIFF for additional information on the format:

An open source library exists to enable your program the ability to extract the metadata information from a GeoTIFF. Below is an example on how to install and run a simple C program to extract metadata information.

Here we are using the Homebrew package manager on OSX to install the GeoTIFF library and use a utility to read a GeoTIFF:

$ brew install libgeotiff $ listgeo <filename>.tif

Once the GeoTIFF library is installed, you should be able to compile a simple C program to read the metadata:

/**

* Simple example of a C program to read metadata from a GeoTIFF.

*

* NOTE: This is a simple example with very little error handling.

*

* @license http://data.gc.ca/eng/open-government-licence-canada

*/

#include <stdio.h>

#include <stdlib.h>

#include <xtiffio.h>

#include <geotiffio.h>

#include <geokeys.h>

int main(void) {

TIFF *tif = (TIFF*)0; // TIFF-level descriptor

GTIF *gtif = (GTIF*)0; // GeoKey-level descriptor

double major_axis_key = 0;

// Open TIFF file

tif = XTIFFOpen("geo.tif", "r");

if (!tif) {

printf("Unable to open GeoTIFF file.\n");

exit(-1);

}

// Open GTIF Key parser; keys will be read at this time.

gtif = GTIFNew(tif);

if (!gtif) {

printf("Unable to access GTIF metadata.\n");

exit(-1);

}

// Read a GTIF Key pair

if (GTIFKeyGet(gtif, GeogSemiMajorAxisGeoKey, &major_axis_key, 0, 1)) {

printf("GeogSemiMajorAxisGeoKey: %f\n", major_axis_key);

} else {

printf("Unable to locate GeogSemiMajorAxisGeoKey\n");

}

// Clean up and exit.

GTIFFree(gtif);

XTIFFClose(tif);

return 1;

}

Output:

$ ./geotiffmeta GeogSemiMajorAxisGeoKey: 6378137.000000

Shapefiles

ESRI Shapefiles (SHP) are popular geospatial vector data files which are accompanied by a series of files necessary to use the SHP file. As listed on Wikipedia, the following is usually packaged into a compressed ZIP file:

Mandatory files:

- .shp — shape format; the feature geometry itself

- .shx — shape index format; a positional index of the feature geometry to allow seeking forwards and backwards quickly

- .dbf — attribute format; columnar attributes for each shape, in dBase IV format

Optional files:

- .prj — projection format; the coordinate system and projection information, a plain text file describing the projection using well-known text format

- .sbn and .sbx — a spatial index of the features

- .fbn and .fbx — a spatial index of the features for shapefiles that are read-only

- .ain and .aih — an attribute index of the active fields in a table

- .ixs — a geocoding index for read-write shapefiles

- .mxs — a geocoding index for read-write shapefiles (ODB format)

- .atx — an attribute index for the .dbf file in the form of shapefile.columnname.atx (ArcGIS 8 and later)

- .shp.xml — geospatial metadata in XML format, such as ISO 19115 or other XML schema

- .cpg — used to specify the code page (only for .dbf) for identifying the character encoding to be used

Source: Shapefile - Wikipedia.org

Links to useful tools:

Converting Shapefiles to GeoJSON

There is a great write-up by Ben Balter on converting Shapefiles to GeoJSON using gdal’s ogr2ogr utility as follows:

ogr2ogr -f GeoJSON -t_srs crs:84 [name].geojson [name].shp

Application Programming Interfaces

What is an API?

An Application Programming Interface (API) is a broad term. In the context of Government of Canada’s Data Portal, it refers to internet connected software interfaces that provide access to open data. In most cases, these interfaces will use the HTTP protocol. APIs provide on-demand access to large, timely or complex data allowing developers to mash data from multiple sources and create new views on information through applications or visualizations.

For more information on how to work with or build an API please see the following videos:

- "What is an API?" for federal government by GSA

- API Webinar Series: Learn How to Use and Create APIs

- Créer & comprendre une API (available in French only)

Open Government APIs

The Government of Canada is working on releasing APIs that will:

- create economic opportunity, or public or private innovation;

- relate to data commonly requested by Canadians (via email, suggested data sets, round table discussions, consultations, and ATI requests);

- improve accountability and responsiveness;

- increase public knowledge of the Government of Canada and its operations;

- further the core mission of departments and agencies; and

- provide insight related to student and youth issues (e.g. Canada student loan data).

You can access the data.gc.ca dataset registry through our API.

Connecting to an API

In general, APIs are intended for developer use. This section will cover examples using various tools and programming languages. Consider searching the Open Data Portal for datasets if you do not have any programming experience.

Using cURL

cURL is a command-line tool used to transfer data from or to a server over various TCP/IP protocols. In this case, we will review how to use cURL to request data from an HTTP RESTful API. cURL can be installed on Windows, OSX or Linux distributions.

Windows

OSX

- via Macports;

- via Homebrew; or

- Download cURL

Linux

- A package manager such as YUM, APT-GET or the GUI software package installer; or

- Download cURL.

Once installed, cURL should be accessible from a command-line prompt by simply typing curl. Typing curl will not yield anything useful other than confirm ou have it installed. At a minimum, you need to specify the destination URL.

You may also need to provide some parameters including HTTP headers to successfully query an API. In our example, the API recognizes the Accept and Accept-Language headers.

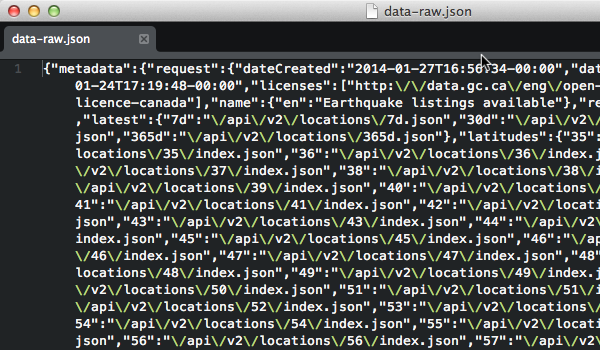

curl -H "Accept: application/json" -H "Accept-Language: en" \ "http://www.earthquakescanada.nrcan.gc.ca/api/v2/locations/" > data-raw.json

By default, cURL will output the response from the destination onto the console screen (STDOUT). The above example redirects the output to a file named data-raw.json which will contain the English dataset in JSON format.

Figure 1 - A raw JSON response

Accessible description of Figure 1

A screenshot of a JSON response file in its raw minified format.

In most cases, the response will be optimized (minified) to reduce the data transfer size (Figure 6). You can use another command-line tool such as jsonpp to format the data into a human-readable output (Figure 7).

Figure 2 - A "pretty print" formatted JSON response

Accessible description of Figure 2

A screenshot of a JSON response file prettified for human readability.

Using Browser Plugins

The majority of browsers have plugins available which are tools to interact with RESTful APIs. These tools allow you to set parameters, headers, fetch a response and visualize the data. These can be very useful tools to quickly explore API data. Search your browser’s plugin repository to see what plugins are available for you.

Using Programming Languages

Most modern programming languages have the capabilities of accessing network-based resources such as HTTP-based services either natively or through libraries. We will cover some simple examples of how to get started using some common languages used for web and mobile development to access a RESTful API.

The sample programs below will each demonstrate how to query an API and parse the JSON response. The response is parsed to display the name of the result set and iterate over one of the result groups.

PHP

The following PHP example uses the cURL:

<?php

/**

* Simple example of a PHP script used to query an API.

*

* NOTE: This is a simple example without any error handling.

*

* @license http://data.gc.ca/eng/open-government-licence-canada

*/

// Request URL

$url = 'http://www.earthquakescanada.nrcan.gc.ca/api/earthquakes/';

// Request HTTP headers

$headers = array(

'Accept: application/json',

'Accept-Language: en'

);

// Initialize the cURL object

$cu = curl_init($url);

// Set the cURL options

curl_setopt($cu, CURLOPT_RETURNTRANSFER, TRUE);

curl_setopt($cu, CURLOPT_HTTPHEADER, $headers);

// Run the cURL request

$response = curl_exec($cu);

// Properly close the cURL object

curl_close($cu);

// Display response data

if ($response) {

$json = json_decode($response);

echo "{$json->metadata->request->name->en}\n";

foreach($json->latest as $key=>$value) {

echo "{$key} -> {$value}\n";

}

}

Output:

Earthquake listings available 7d -> /api/v2/locations/7d.json 30d -> /api/v2/locations/30d.json 365d -> /api/v2/locations/365d.json

Python

In Python, two libraries are required; the json and requests libraries which are used in the example below:

#!/usr/local/bin/python

# coding=utf-8

"""

Simple example of a Python script used to query an API.

@note: This is a simple example without any error handling.

@license: http://data.gc.ca/eng/open-government-licence-canada

"""

import json

import requests

url = 'http://www.earthquakescanada.nrcan.gc.ca/api/earthquakes/'

options = { "Accept":"application/json", "Accept-Language":"en" }

response = requests.get(url, headers=options)

jdata = response.json()

print jdata['metadata']['request']['name']['en']

for (key, value) in jdata['latest'].items():

print key, "->", value

Output:

Earthquake listings available 365d -> /api/v2/locations/365d.json 7d -> /api/v2/locations/7d.json 30d -> /api/v2/locations/30d.json

JavaScript jQuery

JavaScript executed within a browser is bound by security restrictions around connecting to 3rd party web sites. By default, remote queries are denied by browsers as it is considered a Cross-Site Scripting (XSS). Remote queries are permitted if the API response contains an appropriate Access-Control-Allow-Origin HTTP header allowing foreign queries. The following example connects to an API using the jQuery library.

<!DOCTYPE html>

<html lang="en">

<head>

<title>Demo API call using jQuery</title>

</head>

<body>

<div id="short"></div>

---

<div id="long"></div>

<script src="//ajax.googleapis.com/ajax/libs/jquery/1.10.2/jquery.min.js"></script>

<script>

/**

* Simple example of a jQuery JavaScript used to query an API.

*

* NOTE: This is a simple example with very little error handling.

* @license: http://data.gc.ca/eng/open-government-licence-canada

*/

(function( $ ) {

$(document).ready(function() {

// Shorthand method

$.getJSON('http://www.earthquakescanada.nrcan.gc.ca/api/earthquakes/',

function(json) {

$('#short').append(json.metadata.request.name.en + '<br />');

$.each(json.latest, function(idx, item) {

$('#short').append(idx + ' -> ' + item + '<br />');

});

return;

}

);

// Expanded method

$.ajax({

url: 'http://www.earthquakescanada.nrcan.gc.ca/api/earthquakes/',

dataType: 'json',

headers: {

'Accept' : 'application/json',

'Accept-Language' : 'fr'

},

crossDomain: true,

type: 'GET',

success: function(json) {

$('#long').append(json.metadata.request.name.fr + '<br />');

$.each(json.latest, function(idx, item) {

$('#long').append(idx + ' -> ' + item + '<br />');

});

return;

},

error: function(req, status, error) {

$('#long').append(

status.toString() + ': ' +

error.toString()

);

return;

},

});

});

})( jQuery );

</script>

</body>

</html>

Output:

Earthquake listings available 7d -> /api/v2/locations/latest/7d.json 30d -> /api/v2/locations/latest/30d.json 365d -> /api/v2/locations/latest/365d.json --- Annonces de séismes disponibles 7d -> /api/v2/locations/latest/7d.json 30d -> /api/v2/locations/latest/30d.json 365d -> /api/v2/locations/latest/365d.json

Java

There are several 3rd party libraries for Java SE which allow you to easily work with JSON data. For this example, we are using the JSON.org library which is also available via Maven.

package com.tbs.devcorner.simple;

/**

* Simple example of a Java program used to query an API.

* @license http://data.gc.ca/eng/open-government-licence-canada

*

* Maven Dependency:

* <dependency>

* <groupId>org.json</groupId>

* <artifactId>json</artifactId>

* <version>20131018</version>

* </dependency>

*/

import java.io.*;

import java.net.*;

import org.json.*;

public class App

{

public static void main( String[] args )

{

try {

// Build Connection

URL api_url = new URL("http://www.earthquakescanada.nrcan.gc.ca/api/earthquakes/");

URLConnection api = api_url.openConnection();

// Set HTTP Headers

api.setRequestProperty("Accept", "application/json");

api.setRequestProperty("Accept-Language", "en");

// Get Response

JSONTokener tokener = new JSONTokener(api.getInputStream());

JSONObject jsondata = new JSONObject(tokener);

// Display API name

System.out.println(jsondata.getJSONObject("metadata")

.getJSONObject("request")

.getJSONObject("name")

.get("en").toString());

// Iterate over latest links

JSONObject latest = jsondata.getJSONObject("latest");

for (Object item : latest.keySet()) {

System.out.println(item.toString() + " -> " + latest.get(item.toString()));

}

} catch (MalformedURLException e) {

System.out.println("Malformed URL");

} catch (IOException e) {

System.out.println("IO Error");

}

}

}

Output:

Earthquake listings available 7d -> /api/v2/locations/7d.json 30d -> /api/v2/locations/30d.json 365d -> /api/v2/locations/365d.json

Best Practices

Caching Data

Caching is a broad topic and can be seen at the memory, storage or communication layers by either side of a client/server. In this context, the server side is the data source and the client side is the developer or user who is trying to download the data.

Data Source Caching

Some data sources may use caching to help deal with scalability. As previously mentioned, this caching may be found at different levels within their service. This is typically transparent to end users but it is important to understand these practices to efficiently and respectfully interact with the data source.

If your requests continuously yield the same response yet you are expecting an updated/newer dataset then consider the following:

- You have verified that there is no caching occurring within your tools or framework.

- There is a reasonable expectation that the data source should return a revised response.

- You have looked at the data source’s documentation for information regarding timestamps or cached response rules.

- You have tried changing your query to see if the response differs.

- Try waiting some time to see if the data gets refreshed at a later time.

In some cases, the data source might be using a Content Delivery Network (CDN) to cache common responses. Look at the HTTP headers to see hints of a CDN or other caching mechanisms being used.

Client (Application) Side Caching

You may find optimization opportunities within your application to cache data in part or in its entirety. Caching can dramatically increase the performance of your application and deliver significant improvements to user experiences. Of course, caching on the client-side requires a balance between performance and accuracy as client-side caches can become outdated.

Error Handling

As a general rule for datasets and APIs, it is important to recognize and respectfully react to HTTP response codes. The following is a summary of some of the common HTTP response codes you may encounter.

| Code | Summary | Explanation |

|---|---|---|

| 200 | OK | Successful request |

| 301 | Moved Permanently | The requested item now resides at the following new location |

| 304 | Not Modified | The requested item has not been modified since your last request for it. |

| 404 | Not Found | Nothing was found at the requested URI. |

| 40x | Client Errors | Various errors related to your request. |

| 50x | Server Errors | Various server side errors. Best to review your request and try again at a later time. |